Translate-to-Recognize Networks

PyTorch implementation of Translate-to-Recognize Networks for RGB-D Scene Recognition (CVPR 2019).

Usage

- Download ResNet18 pre-trained on the Places dataset if necessary.

- Data processing.

- We use the ImageFolder format, i.e., [class1/images.., class2/images..], to store the data; use util.splitimages.py to convert your data to this format if necessary.

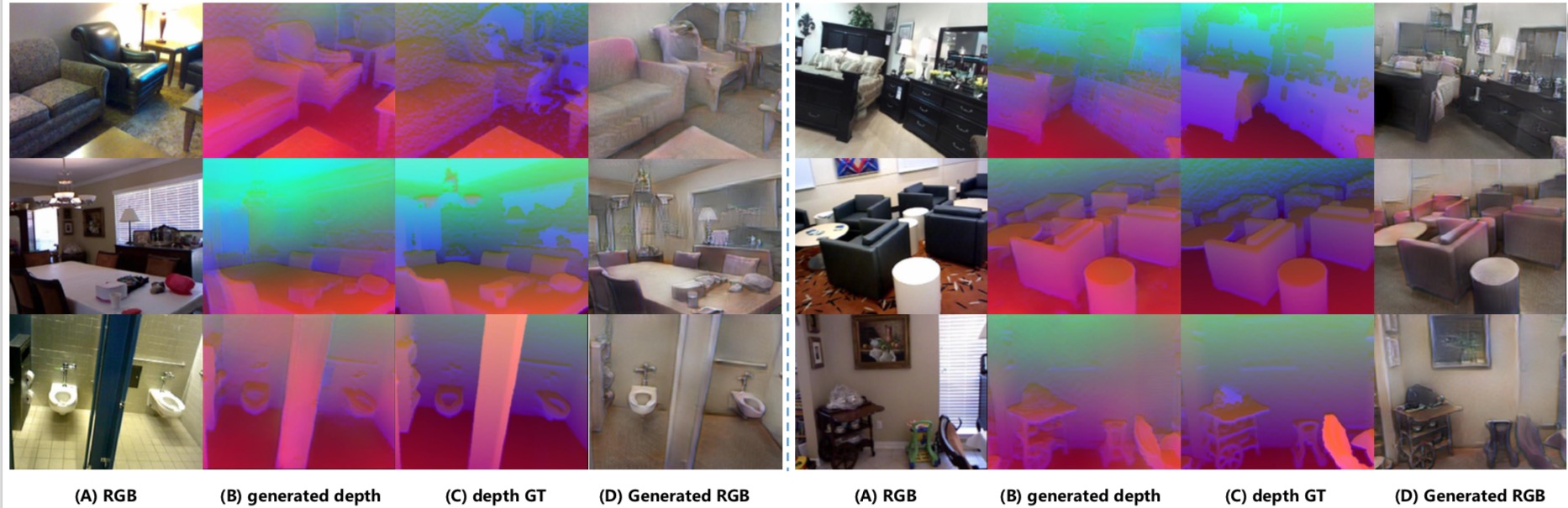

- Use util.conc_modalities.py to concatenate each paired RGB and depth image into a single image for more efficient data loading. An example is shown below (depth data is encoded in HHA format).

- We provide links to download the SUN RGB-D data in ImageFolder format, with the depth data already encoded in HHA format.

- Configuration.

Almost all experiment settings can be configured through the files in the config package.

- Train.

python train.py or bash train.sh

- [!!New!!] A new branch, ‘multi-gpu’, has been uploaded. It computes the losses on each GPU for more balanced usage of multiple GPUs. You can get this version with the following command:

git clone -b multi-gpu https://github.com/ownstyledu/Translate-to-Recognize-Networks.git TrecgNet

- [!!New!!] In the multi-gpu branch, we add more loss types for training, e.g., GAN and pixel2pixel intensity losses. You can enable these losses by modifying the config file.

- [!!New 2019.7.31!!] We added the fusion model and restructured the code, including reusing the base model and making some modifications to the decoder structure and the config file.

Due to time constraints, the multi-gpu branch has not been updated with these changes, but you can still refer to it if you want.
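For the data-processing step, the following is a minimal sketch of the two conventions it relies on; it is not the code in util.splitimages.py or util.conc_modalities.py. It assumes that class labels come from alphabetically sorted folder names (as torchvision's ImageFolder does) and that each RGB image and its HHA encoding are stored side by side with equal widths. The helper names class_to_index, concat_pair and split_pair are hypothetical.

```python
import os

import numpy as np


def class_to_index(root):
    """Map class-folder names under `root` to integer labels.

    Folders are sorted alphabetically, mirroring how torchvision's
    ImageFolder assigns labels; plain files are ignored.
    """
    with os.scandir(root) as entries:
        classes = sorted(entry.name for entry in entries if entry.is_dir())
    return {name: idx for idx, name in enumerate(classes)}


def concat_pair(rgb, hha):
    """Place an RGB image and its HHA encoding side by side (H x 2W x 3).

    Assumption (hypothetical layout): both arrays are H x W x 3 with
    identical shapes, RGB on the left and HHA on the right.
    """
    if rgb.shape != hha.shape:
        raise ValueError(f"shape mismatch: {rgb.shape} vs {hha.shape}")
    return np.concatenate([rgb, hha], axis=1)


def split_pair(combined):
    """Recover the (rgb, hha) halves from a side-by-side image."""
    width = combined.shape[1]
    if width % 2:
        raise ValueError("combined image width must be even")
    half = width // 2
    return combined[:, :half], combined[:, half:]


# Usage: a 4x6 black "RGB" image paired with a 4x6 white "HHA" image.
rgb = np.zeros((4, 6, 3), dtype=np.uint8)
hha = np.full((4, 6, 3), 255, dtype=np.uint8)
combined = concat_pair(rgb, hha)          # shape (4, 12, 3)
rgb_back, hha_back = split_pair(combined)
```

Storing both modalities in one file means the loader opens a single image per sample and splits it in memory, which is the efficiency the data-processing step refers to.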
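For the configuration and loss options described above, one common pattern is a dictionary of defaults that each experiment overrides. The sketch below is hypothetical: DEFAULT_CONFIG, its keys and values, and merge_config are illustrative names, not the actual contents of the config package.

```python
import copy

# Hypothetical defaults; the real option names live in the repo's config package.
DEFAULT_CONFIG = {
    "arch": "resnet18",
    "batch_size": 32,
    "lr": 2e-4,
    "loss_types": ["CLS", "SEMANTIC"],
    "gpu_ids": [0],
}


def merge_config(base, overrides):
    """Return a new config: `base` updated with `overrides`.

    Unknown keys raise KeyError so that misspelled options are caught early.
    Both inputs are deep-copied, so the shared defaults are never mutated.
    """
    unknown = set(overrides) - set(base)
    if unknown:
        raise KeyError(f"unknown config option(s): {sorted(unknown)}")
    merged = copy.deepcopy(base)
    merged.update(copy.deepcopy(overrides))
    return merged


# Usage: an experiment that adds a (hypothetical) GAN loss and uses two GPUs.
gan_experiment = merge_config(DEFAULT_CONFIG, {
    "loss_types": ["CLS", "SEMANTIC", "GAN"],
    "gpu_ids": [0, 1],
})
```

Deep-copying keeps one experiment's overrides from leaking into the shared defaults, and rejecting unknown keys turns a misspelled option into an immediate error instead of a silently ignored setting.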

Development Environment

- NVIDIA TITAN XP

- CUDA 9.0

- Python 3.6.5

- PyTorch 0.4.1

- torchvision 0.2.1

- tensorboardX

Citation

Please cite the following paper if you find this repository useful.

@inproceedings{du2019translate,
  title={Translate-to-Recognize Networks for RGB-D Scene Recognition},
  author={Du, Dapeng and Wang, Limin and Wang, Huiling and Zhao, Kai and Wu, Gangshan},
  booktitle={Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition},
  pages={11836--11845},
  year={2019}
}